VMware

VMware Tanzu Container Platform

High Level Architecture

Author: Dieter Hubau, Senior Solution Engineer - 25.05.2021 - dhubau@vmware.com

Overview

VMware Tanzu simplifies operation of Kubernetes for multi-cloud deployment, centralising management and governance for many clusters and teams across on-premises, public clouds and edge.

It delivers an open source aligned Kubernetes distribution with consistent operations and management to support infrastructure and app modernisation.

This document will lay out the architecture related to the VMware Tanzu platform we are proposing for De Vereniging van Nederlandse Gemeenten (VNG) and offers a high-level overview of the different components.

Editions

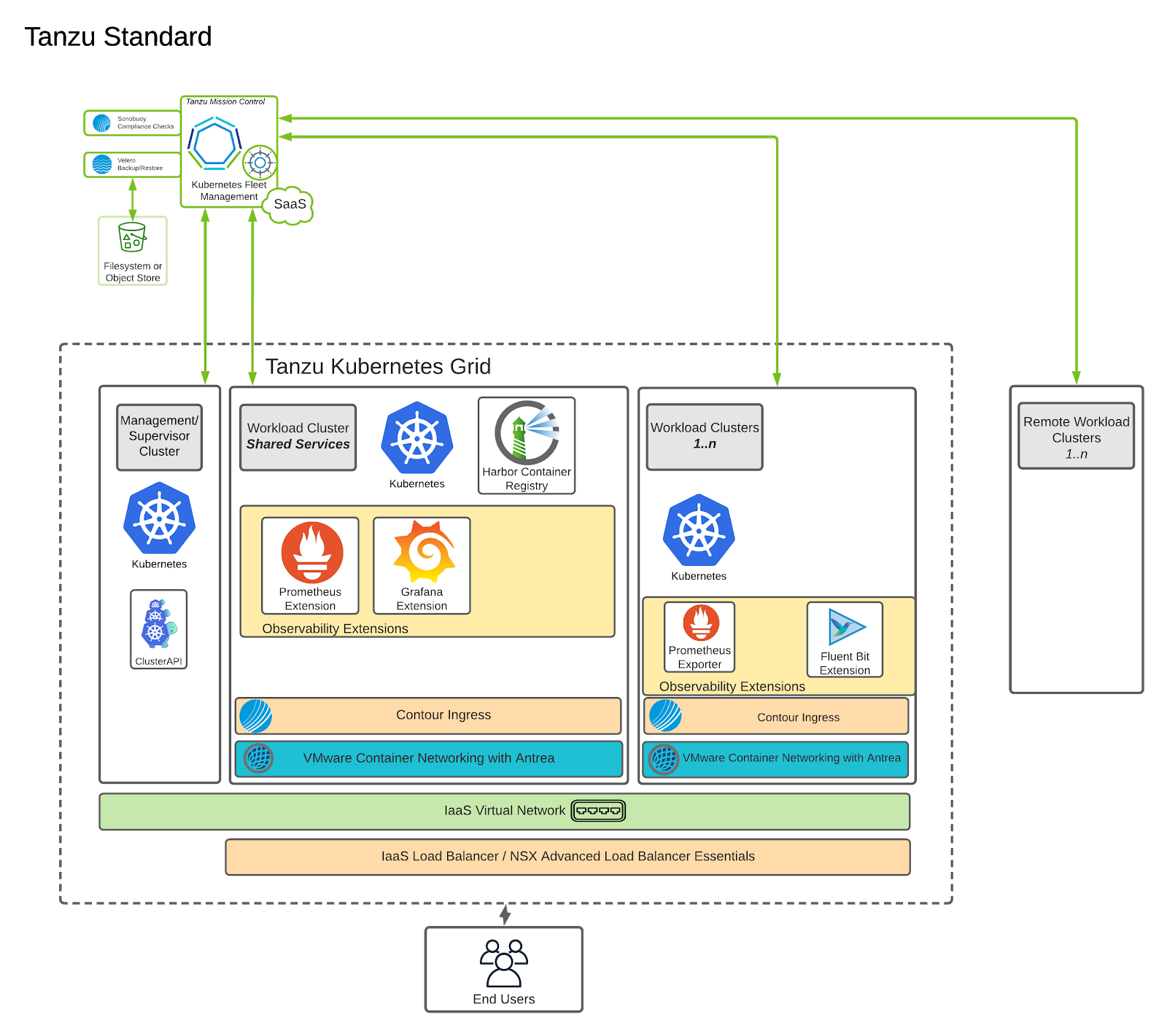

Tanzu Standard

The following is a list of the components that comprise Tanzu Standard:

- Tanzu Kubernetes Grid (TKG) - Enables creation and lifecycle management of Kubernetes clusters.

- Contour Ingress Controller - Provides Layer 7 control to deployed HTTP(S) applications.

- NSX Advanced Load Balancer (ALB) Lite - Provides Layer 4 Load Balancer support, recommended for vSphere deployments without NSX-T, or when there are unique scaling requirements.

- Harbor Image Registry - Provides a centralized location to push, pull, store, and scan container images used in Kubernetes workloads. It also supports storing other artifacts such as Helm charts and includes enterprise-grade features such as RBAC, retention policies, automated garbage collection of stale images, and Docker Hub proxying.

- Tanzu Mission Control - Provides a global view of all Kubernetes clusters, wherever they may be running, and allows for centralized policy management across all deployed and attached clusters.

- Observability Extensions:

  - Fluent Bit - streams cluster and workload logs to a wide range of supported aggregators; it is provided in the extensions package for TKG.

  - Prometheus - provides out-of-the-box health monitoring of Kubernetes clusters.

  - Grafana - provides monitoring dashboards for displaying key health metrics of Kubernetes clusters.

Tanzu Standard puts all these components together into a coherent solution as shown below:

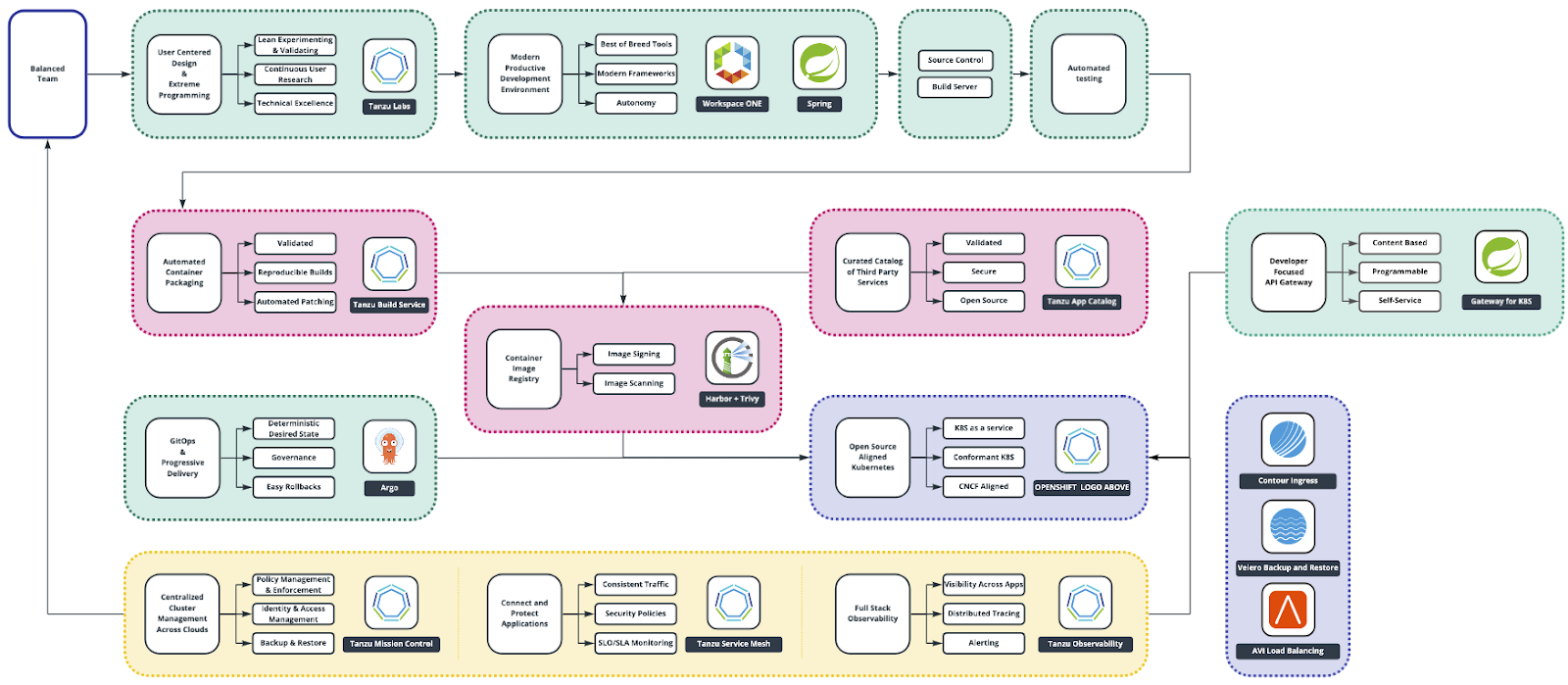

Tanzu Advanced

The components from Tanzu Standard are further enhanced by the additional capabilities in Tanzu Advanced:

- Tanzu Service Mesh - Provides a Layer 7 global control plane to connect workloads securely within or across Kubernetes clusters, wherever they may be deployed. A Global Namespace can be used to span workloads across clusters in a hybrid cloud.

- NSX Advanced Load Balancer (ALB) Enterprise - Full capabilities of the Advanced Load Balancer, including web application firewalling and weighted routing.

- Tanzu Observability - a comprehensive SaaS service capable of ingesting metrics from a multitude of sources, providing ways to query and visualize those metrics in premade or custom dashboards, and allowing the user to configure alerting and perform root cause analysis of problems in any layer of the stack, whether that is infrastructure, Kubernetes or the application layer.

- Tanzu Application Catalog - a suite of 100+ open source, third-party packages that have been curated, secured and packaged, ready to be consumed from an up-to-date catalog and deployed on any vanilla Kubernetes distribution. Bill of materials, security scanning and functional testing reports are provided to help customers satisfy regulatory requirements.

- Tanzu Build Service - a Kubernetes-native solution, built on open source, that gives developers a way of automating the packaging of their applications into a secure, validated container image. Patching, updating and signing of these images can be fully automated across many teams and applications.

- VMware Spring Runtime - Enterprise-grade support package for Java, Tomcat and Spring, including a developer-friendly API gateway that is optimised for Kubernetes and used for SSO, TLS termination, request-based routing, etc.

- API portal - Developer-friendly, decentralised API portal where development teams can publish their APIs and group them in Business Domains. The API portal can automatically discover all APIs published by multiple API Gateway instances, supports the OpenAPI standard and aggregates all APIs in a single pane of glass.

- Tanzu SQL - access to curated images for Highly Available (HA) MySQL and PostgreSQL databases, including container images, Helm charts and Kubernetes Operators to manage and upgrade database instances.

These capabilities provide three major benefits to an enterprise IT organization:

- Set up a fully automated Path to Production through the use of a DevSecOps CI/CD pipeline. We believe that every code commit from a developer that passes through the complete pipeline should be a viable candidate for production.

- Observability across the complete stack:

  - The Application Operations (Dev) roles get the ability to proactively monitor the applications and do deep root cause analysis when an issue arises.

  - The Platform Operations (Ops) roles get a simplified, consistent view of all the layers of the IT stack, from virtualisation, Kubernetes and containers to the network and storage.

- Simplify Day Two Operations: upgrading the platform, patching operating systems, scaling out clusters and patching container images are all automated processes, which reduces manual work and mistakes on the Operations side.

The picture below shows an example of a possible Path to Production using the components described in Tanzu Advanced.

Tanzu Kubernetes Grid

Overview

The Tanzu Kubernetes Grid (TKG) solution allows you to create and manage ubiquitous Kubernetes clusters across multiple infrastructure providers using the Kubernetes Cluster API.

TKG functions through the creation of a Management Kubernetes cluster which houses Cluster API. The Cluster API then interacts with the infrastructure provider to service workload Kubernetes cluster lifecycle requests.

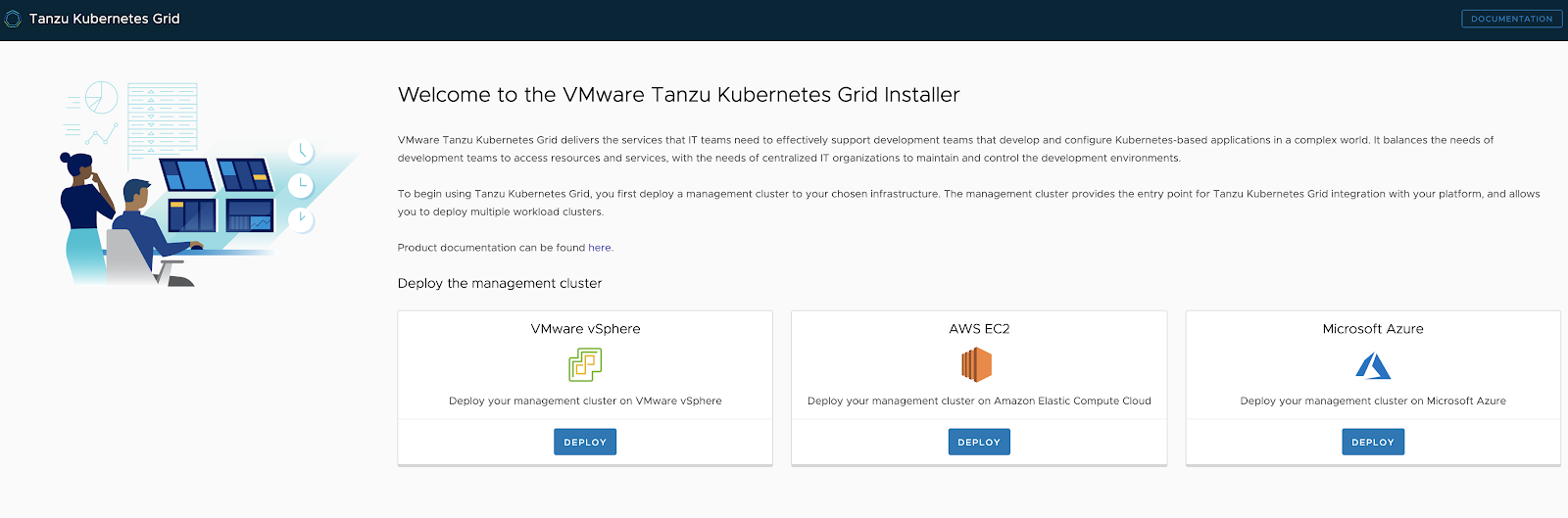

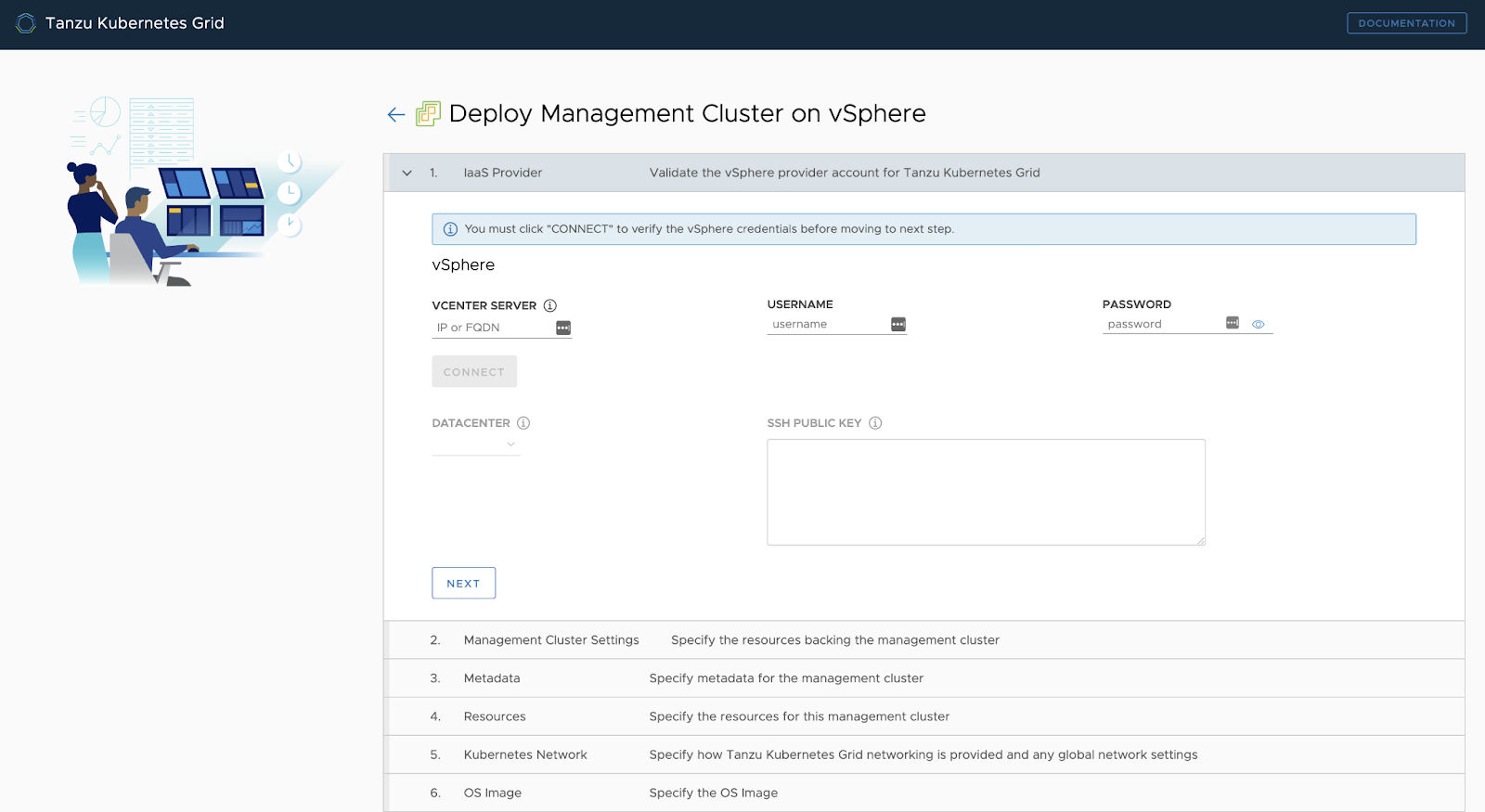

The TKG Installation User Interface shows that, in the current version, it is possible to install TKG on vSphere, AWS EC2 and Microsoft Azure. The UI provides a guided experience tailored to the IaaS, in our case on vSphere.

The TKG installer runs either on an operator’s own machine (it uses Docker) or through a jumphost.

VMware Tanzu at VNG

It is important to make the distinction between vSphere with Tanzu (TKGs) and Tanzu Kubernetes Grid Multicloud (TKGm).

While TKGs and TKGm are separate products in a technical sense, they are both available when licensing Tanzu Editions like Tanzu Basic, Tanzu Standard or Tanzu Advanced. They are different only in management and deployment architecture.

TKGs and TKGm are planned to be merged into one technical solution early next year, containing the union of both feature sets. This will happen through regular upgrades.

vSphere with Tanzu (TKGs)

vSphere with Tanzu (TKGs) is only available on vSphere 7 and offers a more integrated experience geared towards vSphere admins. It allows them to set up and manage the environment much more easily.

More explanation can be found here.

Since the installation of vSphere with Tanzu (TKGs) is quite straightforward, we will mainly discuss how to install TKGm on vSphere.

Tanzu Kubernetes Grid Multicloud (TKGm)

Tanzu Kubernetes Grid Multicloud (TKGm) is our multi-cloud Kubernetes offering that installs all the components (both management and workload clusters) as regular VMs on top of the underlying IaaS provider (vSphere, AWS, Azure, VMC on AWS, ...). More information on TKGm can be found here.

TKG Bill Of Materials

Below are the validated Bills of Materials that can be used to install TKGm in your vSphere environment today:

In the case of vSphere 6.7U3 or higher:

| Software Components   | Version |
|-----------------------|---------|
| Tanzu Kubernetes Grid | 1.3.1   |
| VMware vSphere ESXi   | 6.7 U3  |
| VMware vCenter (VCSA) | 6.7 U3  |
| VMware vSAN           | 6.7 U3  |

Or in the case of vSphere 7U2 or higher:

| Software Components   | Version |
|-----------------------|---------|
| Tanzu Kubernetes Grid | 1.3.1   |
| VMware vSphere ESXi   | 7.0 U2  |
| VMware vCenter (VCSA) | 7.0 U2  |
| VMware vSAN           | 7.0 U2  |

This means we can deploy TKG on either vSphere 6.7 or vSphere 7. We could also deploy TKGm on AWS EC2 instances, on Azure as Compute VMs or on VMC on AWS.

The Interoperability Matrix can be verified at all times here.

Installation Experience

The installation of TKGm on vSphere is done through the same UI as mentioned above, but tailored to a vSphere environment:

You can run this UI locally using the TKG CLI.

Management Cluster

This installation process will take you through the setup of a so-called Management Cluster on your vSphere environment. The Management Cluster is a fully compliant, vanilla Kubernetes cluster that will manage the lifecycle of your Kubernetes workload clusters, through the use of the Cluster API.

The Management Cluster is deployed using regular VMs on your vSphere environment and can be deployed in a separate, management network.

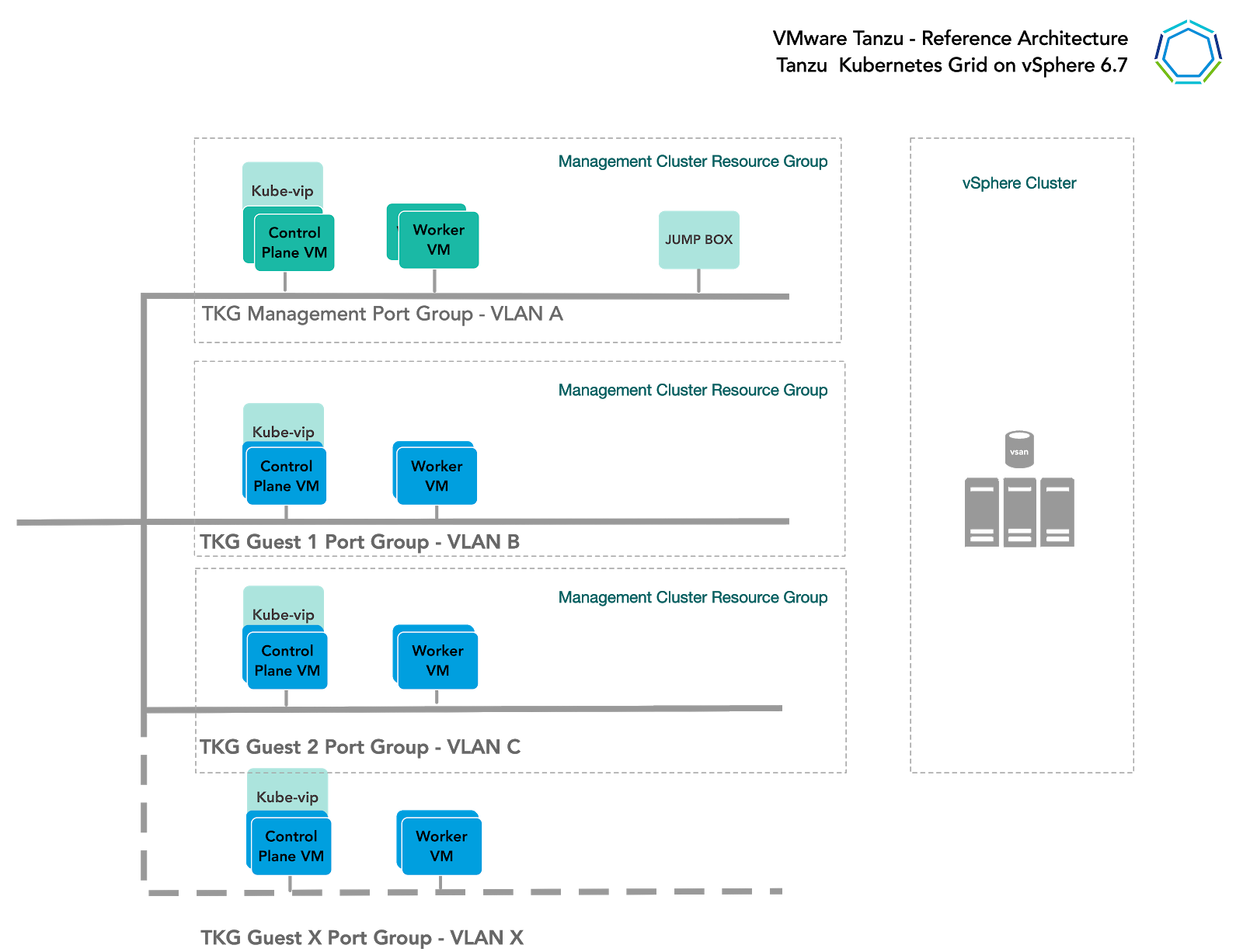

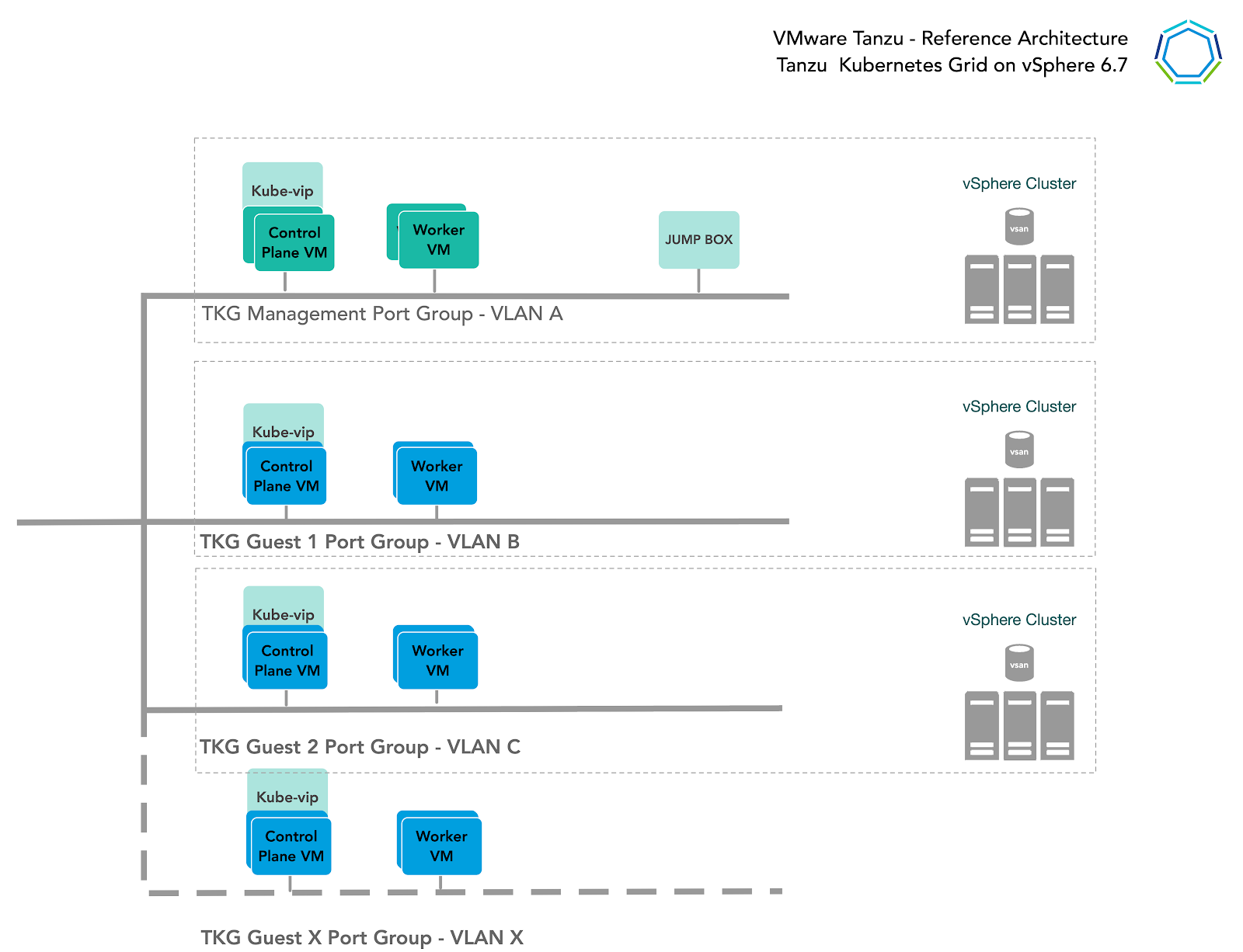

Let’s go over the proposed network setup for this environment.

Network Overview

General Topology

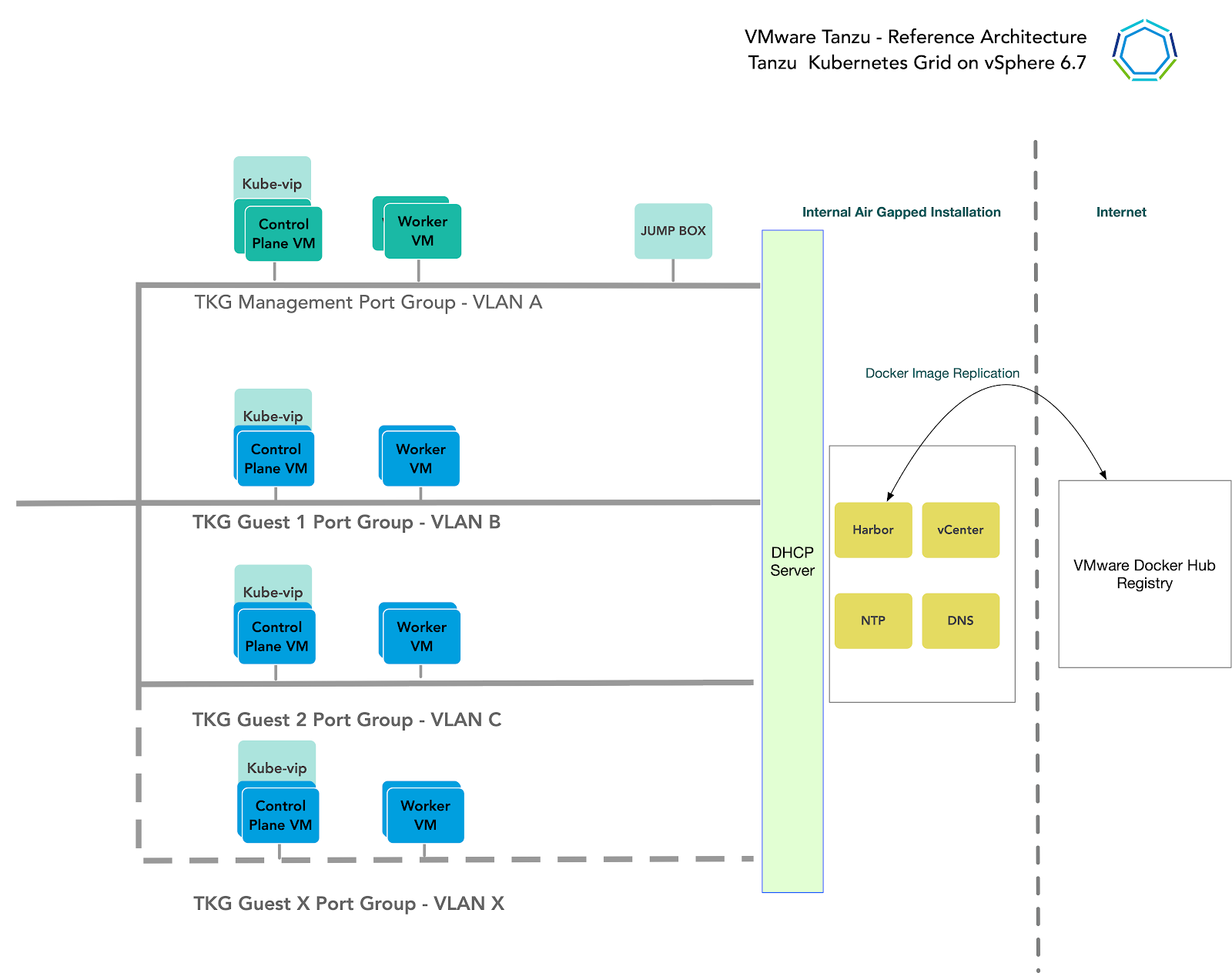

The design above encompasses the generic network architecture for the TKG reference design. For some infrastructure providers the networks can share the same subnet or segment, while in other architectures they might be entirely separate domains, but each infrastructure provider's networks can be mapped onto this general framework. For De Vereniging van Nederlandse Gemeenten (VNG), we want to deploy on vSphere and use different networks (either regular VDS or NSX-T based) for the different clusters.

Network Recommendations

By bootstrapping a Kubernetes management cluster with the TKG CLI tool, TKG is able to manage the lifecycle of multiple Kubernetes workload clusters. In configuring the network around TKG, consider these things:

- In order to have flexible firewall and security policies, place TKG management clusters and workload clusters on different networks.

- The current TKG release does not support static IP assignment for Kubernetes VM components. DHCP is required for each TKG network. Because the Kubernetes control plane currently depends on stable IP assignment, long lease durations are recommended for production environments.

- You must allocate at least one static IP in each subnet to assign to kube-vip. The kube-vip address is a virtual IP maintained between the control plane nodes of a cluster for the purpose of exposing the Kubernetes API server.
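
The addressing constraints above can be checked before a deployment. The following is a minimal sketch and not part of TKG: the function name, the example subnet and the DHCP range are hypothetical, and the rule that the kube-vip address should sit outside the DHCP range is an assumption made here to keep the static address from being leased to a node. It only uses Python's standard `ipaddress` module.

```python
import ipaddress


def check_kube_vip(subnet_cidr, dhcp_start, dhcp_end, kube_vip):
    """Return a list of problems with a proposed kube-vip address (empty if none).

    All inputs are illustrative strings; nothing here queries a real DHCP server.
    """
    subnet = ipaddress.ip_network(subnet_cidr)
    start = ipaddress.ip_address(dhcp_start)
    end = ipaddress.ip_address(dhcp_end)
    vip = ipaddress.ip_address(kube_vip)

    problems = []
    if vip not in subnet:
        problems.append(f"{vip} is outside subnet {subnet}")
    if vip in (subnet.network_address, subnet.broadcast_address):
        problems.append(f"{vip} is a reserved address in {subnet}")
    # Assumption: the static VIP must not overlap the DHCP range.
    if start <= vip <= end:
        problems.append(f"{vip} falls inside the DHCP range {start}-{end}")
    return problems


if __name__ == "__main__":
    # Hypothetical workload network: DHCP serves .100-.200.
    print(check_kube_vip("10.0.20.0/24", "10.0.20.100", "10.0.20.200", "10.0.20.10"))
    print(check_kube_vip("10.0.20.0/24", "10.0.20.100", "10.0.20.200", "10.0.20.150"))
```

Running the same check once per TKG network (management and each workload network) gives a quick sanity pass over the IP plan before the installer is started.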

Firewall Recommendations

To prepare the firewall, you need to gather the following:

- Management Cluster CIDR

- Management Cluster kube-vip IP

- Workload Cluster CIDR

- Workload Cluster kube-vip IP

- VMware Harbor registry IP

- vCenter Server IP

- DNS server IP(s)

- NTP Server(s)

| Source                               | Destination                 | Protocol:Port | Description                                                                |
|--------------------------------------|-----------------------------|---------------|----------------------------------------------------------------------------|
| Workload Cluster Network CIDR        | Management Cluster kube-vip | TCP:6443      | Allow workload cluster to register with management cluster                 |
| Management Cluster CIDR              | Workload Cluster kube-vip   | TCP:6443,5556 | Allow management cluster to configure workload cluster                     |
| Management and Workload Cluster CIDR | Harbor IP                   | TCP:443       | Allow components to retrieve container images                              |
| Management and Workload Cluster CIDR | vCenter IP                  | TCP:443       | Allow components to access vCenter to create VMs and Storage Volumes       |
| Management and Workload Cluster CIDR | DNS Servers                 | UDP:53        | Allow components to resolve DNS names                                      |
| Management and Workload Cluster CIDR | NTP Servers                 | UDP:123       | Allow components to synchronise time                                       |
| Any application dependent services   | N/A                         | N/A           | Allow applications to reach dependent services, e.g. an external database  |
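
Once the values listed above are gathered, the table can be expanded into concrete rules for one environment. The sketch below is illustrative only: the `Rule` type, the `build_rules` function, the dictionary keys and every address in `EXAMPLE_ENV` are hypothetical, and the output is a neutral list that would still need to be translated into the syntax of the firewall in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str
    destination: str
    protocol: str
    ports: tuple
    description: str


# Hypothetical example values; replace with the addresses gathered for the site.
EXAMPLE_ENV = {
    "mgmt_cidr": "10.0.10.0/24",
    "mgmt_vip": "10.0.10.10",
    "workload_cidr": "10.0.20.0/24",
    "workload_vip": "10.0.20.10",
    "harbor_ip": "10.0.30.5",
    "vcenter_ip": "10.0.1.5",
    "dns_servers": ["10.0.1.53"],
    "ntp_servers": ["10.0.1.123"],
}


def build_rules(env):
    """Expand the firewall table into one Rule per source/destination pair."""
    rules = [
        Rule(env["workload_cidr"], env["mgmt_vip"], "TCP", (6443,),
             "workload cluster registers with management cluster"),
        Rule(env["mgmt_cidr"], env["workload_vip"], "TCP", (6443, 5556),
             "management cluster configures workload cluster"),
    ]
    # The remaining rows apply to both the management and the workload network.
    for cidr in (env["mgmt_cidr"], env["workload_cidr"]):
        rules.append(Rule(cidr, env["harbor_ip"], "TCP", (443,), "pull container images"))
        rules.append(Rule(cidr, env["vcenter_ip"], "TCP", (443,), "create VMs and volumes"))
        for dns in env["dns_servers"]:
            rules.append(Rule(cidr, dns, "UDP", (53,), "DNS"))
        for ntp in env["ntp_servers"]:
            rules.append(Rule(cidr, ntp, "UDP", (123,), "NTP"))
    return rules


if __name__ == "__main__":
    for rule in build_rules(EXAMPLE_ENV):
        ports = ",".join(str(p) for p in rule.ports)
        print(f"{rule.source} -> {rule.destination} {rule.protocol}:{ports}  # {rule.description}")
```

Application-specific rules (the last row of the table) are deliberately left out, since they depend on the services each workload needs.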

Container Networking

We support Antrea and Calico as CNI implementations. Both are open source and supported. Antrea is the default; it is built by VMware and, in our view, offers the more powerful architecture and layered setup.

Ingress and Load Balancing

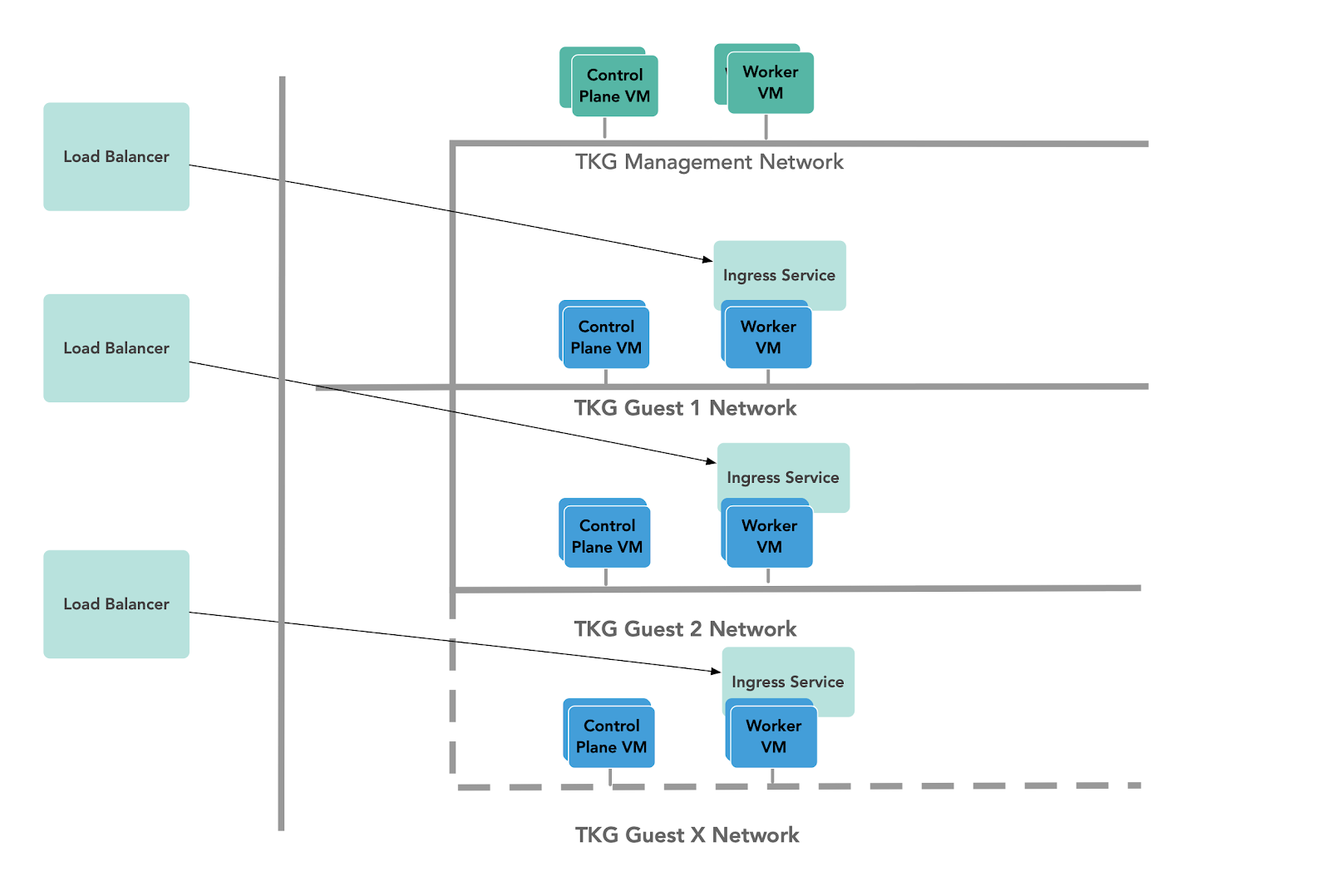

Tanzu Advanced comes with the ability to provision two different load balancer types:

- NSX-T Load Balancer, using vSphere with Tanzu with NSX-T. Note that NSX-T itself is not included in the Tanzu Editions and so must be purchased and installed separately.

- VMware NSX Advanced Load Balancer (acquired through Avi Networks).

HAProxy is also supported as an open source load balancing alternative.

When you enable vSphere with Tanzu and NSX-T is available, NSX-T Load Balancing is the default configuration and provides good performance characteristics. If you are deploying on vSphere Distributed Switches without NSX-T, you can use the VMware NSX Advanced Load Balancer to provide L4-L7 load balancing for your Kubernetes workloads.

The Tanzu Editions also include Contour for Kubernetes Ingress routing.

Contour is exposed by a load balancer and provides Layer 7 routing to your Kubernetes services.
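
To make the Layer 7 routing concrete, the sketch below builds a Contour `HTTPProxy` resource that sends two path prefixes on one hostname to two different Kubernetes services. The helper name, the hostname, the namespace and the service names are hypothetical; the manifest is emitted as JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def http_proxy(name, namespace, fqdn, routes):
    """Build a Contour HTTPProxy manifest as a plain dict.

    routes is a list of (path_prefix, service_name, service_port) tuples.
    """
    return {
        "apiVersion": "projectcontour.io/v1",
        "kind": "HTTPProxy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "virtualhost": {"fqdn": fqdn},
            "routes": [
                {
                    "conditions": [{"prefix": prefix}],
                    "services": [{"name": service, "port": port}],
                }
                for prefix, service, port in routes
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical application with a separate API backend.
    manifest = http_proxy(
        "demo", "demo-apps", "demo.example.org",
        [("/api", "demo-api", 8080), ("/", "demo-web", 80)],
    )
    print(json.dumps(manifest, indent=2))
```

The load balancer only needs to expose Contour's Envoy service once; additional applications are then added as further `HTTPProxy` (or standard Ingress) resources without new load balancer addresses.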

Storage

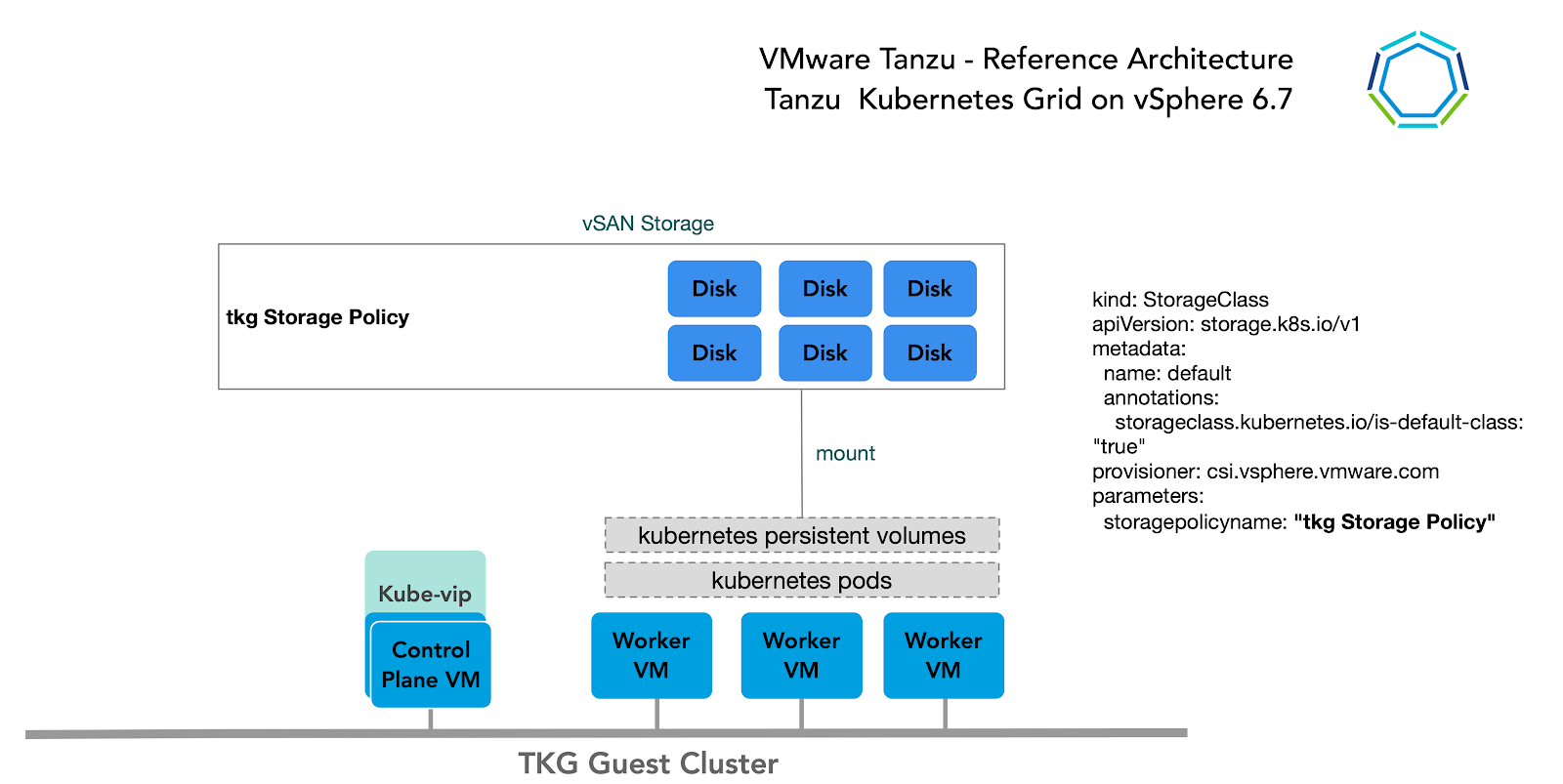

Many storage options are available and Kubernetes is agnostic about which option you choose.

For Kubernetes stateful workloads, TKG installs the vSphere Container Storage Interface (vSphere CSI) driver to provision Kubernetes persistent volumes for pods automatically. While the default vSAN storage policy can be used, site reliability engineers (SREs) and administrators should evaluate the needs of their applications and craft a specific vSphere Storage Policy. vSAN storage policies describe classes of storage (e.g. SSD or NVMe) along with quotas for your clusters.

In vSphere 7.0 U1 and later environments with vSAN, the vSphere CSI driver for Kubernetes also supports the creation of NFS file volumes, which support the ReadWriteMany access mode. This allows provisioning volumes that can be read and written by multiple pods simultaneously. To support this, the vSAN File Service must be enabled.

For De Vereniging van Nederlandse Gemeenten (VNG), any other type of vSphere datastore can also be used. Operators can define so-called TKG Cluster Plans that select a specific vSphere datastore when creating new workload clusters. All developers can then provision persistent volumes from that underlying datastore.
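
As an illustration of the ReadWriteMany case, the sketch below generates a StorageClass for the vSphere CSI driver and a matching PersistentVolumeClaim. The helper names, the policy name and the object names are hypothetical. The provisioner name and the `storagepolicyname` and `csi.storage.k8s.io/fstype` parameters reflect our understanding of the vSphere CSI driver documentation and should be verified against the driver version in use.

```python
import json


def rwx_storage_class(name, storage_policy):
    """StorageClass for vSAN file (NFS) volumes via the vSphere CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {
            # Assumed parameter keys; check them against the CSI driver docs.
            "storagepolicyname": storage_policy,
            "csi.storage.k8s.io/fstype": "nfs4",
        },
    }


def rwx_claim(name, storage_class, size):
    """PersistentVolumeClaim that several pods can mount read-write."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical policy and object names.
    print(json.dumps(rwx_storage_class("vsan-file", "vsan-file-policy"), indent=2))
    print(json.dumps(rwx_claim("shared-data", "vsan-file", "10Gi"), indent=2))
```

For ordinary block volumes the claim would request `ReadWriteOnce` instead and the file system parameter would not be set to NFS.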

Clustering on vSphere

Single Cluster

Starting with a single vSphere cluster, management and workload Kubernetes clusters can be separated into different vSphere resource pools. Using a resource pool lets you manage each Kubernetes cluster's CPU and memory limits and reservations; however, it does not separate the clusters at the physical layer.

This approach is ideal for functional trials, proofs of concept, or production application deployments that do not require hardware separation.

Multi-clusters

For more physical separation of application workloads on Kubernetes, operators can deploy separate Kubernetes clusters to independent vSphere clusters and gain physical layer separation. For example, a Kubernetes cluster with intense computing workloads can leverage hosts with high performance CPU, while extreme IO workload clusters can be placed onto hosts with high performance storage.

This also applies to the management cluster, for compute separation between management and workloads.

High Availability

The current TKG release relies heavily on existing vSphere features for mitigating common availability disruptions, such as single-host hardware failure. In this scenario, ensuring vSphere HA is enabled will allow VMs on failed hardware to be automatically restarted on surviving hosts.

The TKG management cluster performs Machine Health Checks on all Kubernetes worker VMs. This ensures workloads remain in a functional state, and can remediate issues like:

- Worker VM accidentally deleted or corrupted

- Kubelet process on worker VM accidentally stopped or corrupted

This health check aims to keep your worker capacity stable and schedulable for workloads. It does not, however, apply to the control-plane VMs, and it will not recreate VMs after a physical host failure. vSphere HA and Machine Health Checks therefore complement each other to enhance workload resilience.
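
For reference, a Machine Health Check is a Cluster API resource that lives in the management cluster. The sketch below builds one for the worker machines of a workload cluster. It is not the manifest TKG generates: the helper name, the label selector, the timeout and the default API version (`cluster.x-k8s.io/v1alpha3`) are assumptions and should be matched to the Cluster API version your management cluster runs.

```python
import json


def machine_health_check(cluster_name, namespace, worker_labels,
                         api_version="cluster.x-k8s.io/v1alpha3",
                         timeout="5m"):
    """Build a Cluster API MachineHealthCheck manifest for worker machines.

    api_version, worker_labels and timeout are assumptions for this sketch.
    """
    return {
        "apiVersion": api_version,
        "kind": "MachineHealthCheck",
        "metadata": {"name": f"{cluster_name}-workers", "namespace": namespace},
        "spec": {
            "clusterName": cluster_name,
            # Select worker machines only; control-plane VMs are not covered.
            "selector": {"matchLabels": dict(worker_labels)},
            # A node whose Ready condition stays Unknown or False for longer
            # than the timeout is considered unhealthy and gets replaced.
            "unhealthyConditions": [
                {"type": "Ready", "status": "Unknown", "timeout": timeout},
                {"type": "Ready", "status": "False", "timeout": timeout},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical cluster name and node-pool label.
    mhc = machine_health_check("workload-1", "default",
                               {"node-pool": "workload-1-worker-pool"})
    print(json.dumps(mhc, indent=2))
```

A stopped kubelet shows up as a `Ready` condition of `Unknown`, and a deleted VM as a machine without a healthy node, which is why both failure cases listed above are covered by the same check.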

Enabling Fully Automated DRS is also recommended to continuously ensure cluster load is evenly spread over all hosts. This indirectly helps reduce vSphere HA recovery time as no single host would be overloaded and thus have longer recovery times due to more or larger VMs to restart on surviving hosts. These are the recommended vSphere HA settings:

Non-Stretched vSphere clusters

We propose that De Vereniging van Nederlandse Gemeenten deploys TKG on non-stretched vSphere clusters until VMware can deliver official support for a stretched cluster topology. Although a stretched cluster topology is already possible, the TKG layer is unaware of it and will provision VMs randomly across the two sites. This means that, when the VMs happen to be placed unfavourably, you would experience downtime during a site failure. It is possible to run the workloads in an active-passive way by putting the hosts on the second site in maintenance mode and letting DRS move the VMs on site failure, but this would equally cause downtime. As mentioned, these setups are not fully supported yet and are therefore not advised for production. Support for stretched cluster setups is expected in Q3 of 2021.

Full HA topologies that map different physical sites to Kubernetes Availability Zones are planned for Q1 2022.
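
The effect of unaware placement on a stretched cluster can be illustrated with a small enumeration. The sketch below assumes a control plane of three nodes, each placed on one of two sites with equal probability, and uses the etcd rule that a strict majority of members must survive. The function names and these assumptions are ours and do not describe TKG's actual scheduling.

```python
from itertools import product


def quorum_survives(placement, failed_site):
    """True if a strict majority of the original nodes is outside failed_site."""
    survivors = sum(1 for site in placement if site != failed_site)
    return survivors > len(placement) // 2


def risky_placements(node_count=3, sites=("A", "B"), failed_site="A"):
    """List every placement in which losing failed_site breaks quorum."""
    return [
        placement
        for placement in product(sites, repeat=node_count)
        if not quorum_survives(placement, failed_site)
    ]


if __name__ == "__main__":
    total = 2 ** 3
    risky = risky_placements()
    print(f"{len(risky)} of {total} placements lose quorum if site A fails")
```

Under these assumptions, half of all placements lose the control plane when one given site fails. With only two sites, one of them always holds the majority of the three nodes, so no placement survives the loss of either site; this is why mapping sites to availability zones, ideally with a third location, matters for a full HA topology.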

Container Registry

The Tanzu Editions include Harbor as a container registry. Harbor provides a location for pushing, pulling, storing and scanning container images to be used in your Kubernetes clusters. There are three main supported installation methods for Harbor:

- TKG Extension deployment to a TKG cluster - this installation method is recommended for general use cases.

- Helm-based deployment to a Kubernetes cluster - this installation method may be preferred by customers already invested in Helm.

- VM-based deployment using docker-compose - this installation method is recommended when TKG is being installed in an air-gapped or Internet-less environment and no Kubernetes clusters exist yet on which to install Harbor. When Kubernetes is already available, the Helm-based deployment also works for air-gapped environments.

If deploying Harbor without a publicly signed certificate, be sure to follow the instructions for including the Harbor root CA in your TKG clusters.

Tanzu Mission Control

Attaching clusters into Tanzu Mission Control (TMC) provides a centralised administrative interface that enables you to manage your global portfolio of Kubernetes clusters. TMC can assist you with:

- Centralized Lifecycle Management - managing the creation and deletion of workload clusters using registered management clusters.

- Centralized Monitoring - viewing the inventory of clusters and the health of clusters and their components.

- Authorization - centralized authentication and authorization, with federated identity from multiple sources (e.g. AD, LDAP or SAML), plus an easy-to-use policy engine for granting the right access to the right users across teams.

- Compliance - enforcing the same set of policies on all clusters.

- Data protection - using Velero through TMC to verify that your workloads and persistent volumes are being backed up.

Observability

Metrics on premises

Tanzu Standard Edition includes observability through the Prometheus and Grafana extensions. The extensions can be installed in an automated way by applying the YAML in the extensions folder as documented here. Grafana provides a way to view cluster metrics as shown in the two screenshots below:

Figure 13 - TKG Cluster metrics in Grafana

Metrics in Tanzu Observability

Through Tanzu Advanced, observability can be significantly enhanced by using VMware Tanzu Observability by Wavefront. It is a multi-tenant SaaS application by VMware that collects and displays metrics and trace data from the full-stack platform as well as from applications. The service makes it possible to create alerts tuned by advanced analytics, to assist in troubleshooting systems and to understand the impact of running production code.

In the case of vSphere and TKG, Tanzu Observability is used to collect data from components in vSphere, from Kubernetes, and from applications running on top of Kubernetes.

You can configure Tanzu Observability with an array of capabilities. Here are the recommended plugins for this design:

| Plugin                           | Purpose                                           | Key Metrics                                           | Example Metrics                                   |
|----------------------------------|---------------------------------------------------|-------------------------------------------------------|---------------------------------------------------|
| Telegraf for vSphere             | Collect metrics from vSphere                      | ESXi Server and VM performance & resource utilization | vSphere VM, Memory and Disk usage and performance |
| Wavefront Kubernetes Integration | Collect metrics from Kubernetes clusters and pods | Kubernetes container and Pod statistics               | Pod CPU usage rate, DaemonSet ready stats         |

Tanzu Observability can display metric data from the full stack of application elements from the platform (VMware ESXi servers), to the virtual environment, to the application environment (Kubernetes) and down to the various components of an application (APM).

There are over 120 integrations with prebuilt dashboards available in Wavefront. More are being added each week.

Logging

The Tanzu Editions also include Fluent Bit for integration with logging platforms such as vRealize Log Insight, Elasticsearch, Splunk or other logging solutions. Details on configuring Fluent Bit for your logging provider can be found in the documentation here.

The Fluent Bit DaemonSet is installed automatically as an extension on all TKG clusters, but each TKG cluster can be configured differently, if desired.

vRealize Log Insight (vRLI) provides real-time log management and log analysis with machine learning based intelligent grouping, high-performance searching, and troubleshooting across physical, virtual, and cloud environments. vRLI already has a deep integration with the vSphere platform where you can get key actionable insights, and it can be extended to include the cloud native stack as well.

The vRealize Log Insight appliance is available as a separate on premises deployable product. You can also choose to go with the SaaS version vRealize Log Insight Cloud.

Summary

TKG on vSphere on hyper-converged hardware offers high performance potential and convenience, and addresses the challenges of creating, testing, and updating on-premises Kubernetes platforms in a consolidated production environment. This validated approach will result in a near-production quality installation with all the application services needed to serve combined or uniquely separated workload types on a shared infrastructure.

This plan meets many Day 0 needs for quickly aligning product capabilities to full stack infrastructure, including networking, firewalls, load balancing, workload compute alignment and other capabilities.

Observability is quickly established and easily consumed with Tanzu Observability.
